The Team

Andreas Addison

Civic Innovator

City of Richmond

Danny Avula

Deputy Director

Richmond City Health District

Ben Golder

Fellow

Designer

Sam Matthews

Fellow

Web Developer

Emma Smithayer

Fellow

Software Engineer

The Richmond Code for America fellowship focused on healthcare access for people in poverty. During our initial research phase, we heard from patients, case managers, and screening staff that the eligibility screening process at safety-net health services is often a significant barrier to care. Patients struggle to find the necessary income documentation (such as pay stubs or tax returns), and face long waits to fill out applications or receive responses. They often don’t understand why some people are accepted and others are denied. And if they need care from more than one organization, they usually have to start the process from scratch at each one. For many people, it’s easier to go to the emergency room when they get sick.

We wanted to find ways to make this process more efficient so that patients can get into care faster, and we wanted to make it less confusing and a better experience for everyone involved. We developed a web application called Quickscreen, which collects the information currently found on paper eligibility forms, so that applications can be filled out and submitted anywhere. With patients’ permission, their online profile is accessible to staff at partner organizations in Richmond, so that they won’t have to provide the same information repeatedly. The software also estimates a patient’s eligibility at various organizations and tracks referrals and screening results over time.

After extensive tests with mock data, the software is ready for a pilot with a few dozen real patients. At scale, we think Quickscreen will get patients through the screening process more quickly, save staff time, and improve patient trust in the healthcare system.

A key part of Code for America’s approach is building with communities, not for them. In February, we talked with over a hundred people, including clinicians, social workers, community health workers, hospital and clinic administrators, technical staff at Richmond City Health District and the City of Richmond, academics, leaders at health-related nonprofits, and community members who use safety-net health services. We asked them about their experiences with safety-net healthcare in Richmond, their hopes and challenges, and how technology helps or hinders them.

Several themes emerged, which we discussed in our February blog post.

We decided to focus on eligibility screening for several reasons:

Other projects that we prototyped but didn’t fully develop include:

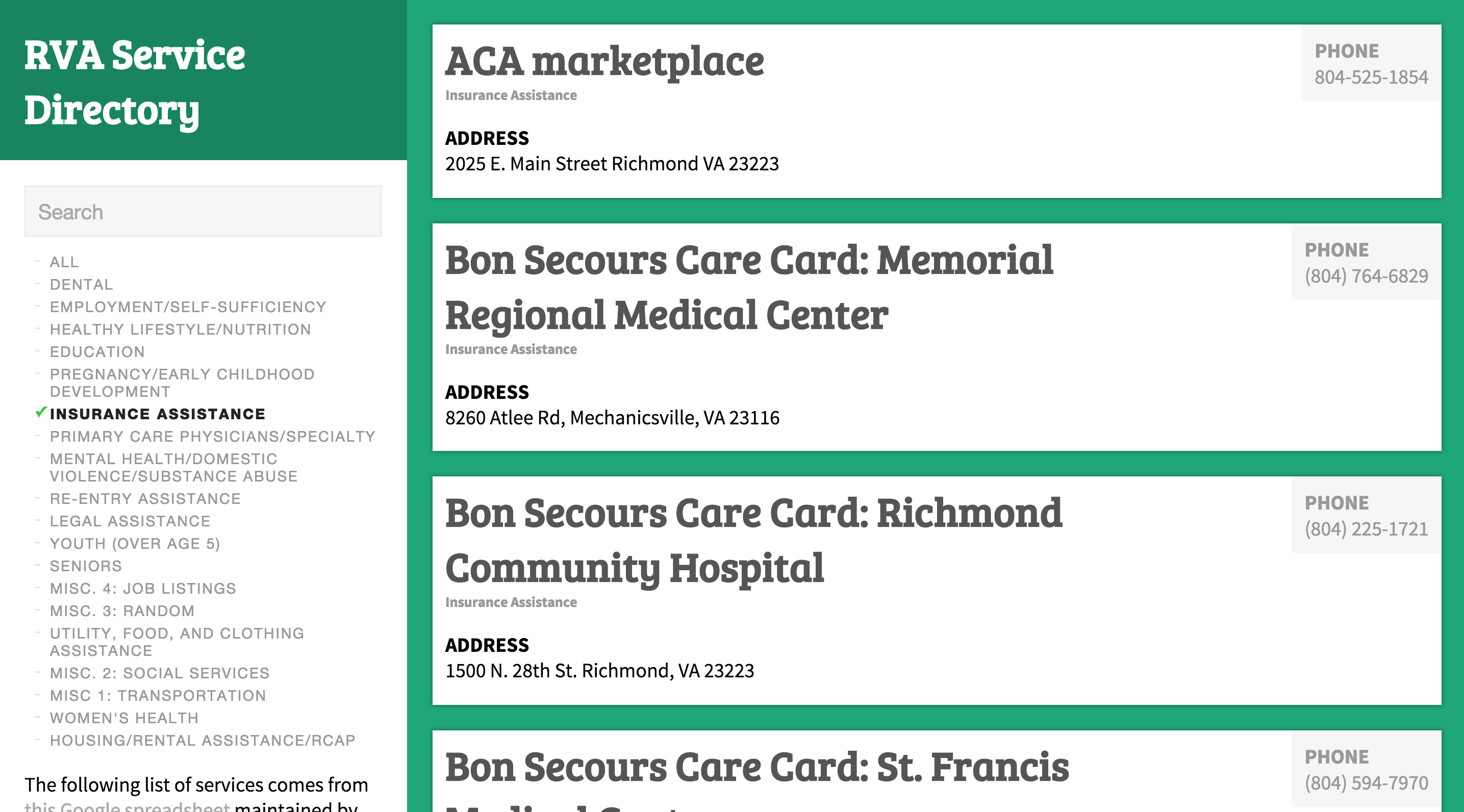

The single most requested tool in our February meetings was an up-to-date, searchable list of all available services for low-income people in Richmond. Because services change so quickly, existing resources like the statewide 211 system tend to have incomplete or out-of-date information. Some case managers we met curated their own lists, but those aren’t publicly available.

Amber Haley and her team at the Center on Society and Health at VCU maintain a detailed Google Sheets spreadsheet of resources in the city that includes services offered, contact information, and hours of operation. In March, we developed a searchable web-based list of services in Richmond that uses Amber’s spreadsheet as its database. This means that when she updates the spreadsheet, the website updates automatically, so there’s no need to enter data into a separate system.
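Using a spreadsheet as the database mostly comes down to reading its rows and filtering them. The Python sketch below is not the code we shipped. It assumes the sheet has been exported or published as CSV text, and the column names (`Name`, `Services`, `Hours`) and sample rows are hypothetical.

```python
import csv
import io


def load_resources(csv_text):
    """Parse CSV text (e.g. a spreadsheet exported as CSV) into a list of row dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def search(resources, query):
    """Return the rows in which every word of the query appears in some column."""
    terms = query.lower().split()
    results = []
    for row in resources:
        # Combine all non-empty cell values into one lowercase string to search.
        haystack = " ".join(str(value) for value in row.values() if value).lower()
        if all(term in haystack for term in terms):
            results.append(row)
    return results


# Hypothetical sample data standing in for the real spreadsheet.
SAMPLE_CSV = """Name,Services,Hours
Example Clinic,primary care; dental,Mon-Fri 8-5
Example Food Bank,food pantry,Sat 9-12
"""

resources = load_resources(SAMPLE_CSV)
print([row["Name"] for row in search(resources, "dental")])
```

Because the website reads the sheet each time, editing a row in the spreadsheet is the only update step.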

However, there are quite a few existing companies and nonprofits hard at work on this problem, and we thought we were unlikely to add much value. The last thing we wanted to do was create another out-of-date list of resources (see this comic).

Since a patient’s eligibility is largely determined by his income as a percentage of the Federal Poverty Level, our projects require calculating this number from the patient’s income and household size. The federal government updates the FPL figures annually, which means any software that does this calculation has to be updated accordingly. To make this easier, we built the FPL API, which keeps the calculation in a single location that any number of projects can use. The code is open-source, so anyone can use it in their own applications. We used it to build a lightweight calculator that staff can use to determine where a patient falls on the Virginia Department of Health’s sliding fee scale.
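In its common simplified form, the calculation is a base amount for a one-person household plus a fixed increment for each additional person, with the patient’s annual income divided by that total. The Python sketch below is not the FPL API’s code. The constants are assumed placeholders approximating the 2015 HHS guidelines for the 48 contiguous states, and the sliding-scale tiers are invented for illustration; they are not the Virginia Department of Health’s actual scale.

```python
# Assumed placeholder constants (approximately the 2015 HHS poverty guidelines,
# 48 contiguous states). These change every year; check the current figures.
BASE_AMOUNT = 11_770        # guideline for a one-person household, in dollars
PER_EXTRA_PERSON = 4_160    # added for each additional household member

# Hypothetical sliding-scale tiers: (upper bound in % of FPL, share of full fee).
SLIDING_SCALE = [(100, 0.0), (150, 0.25), (200, 0.5)]


def poverty_guideline(household_size):
    """Annual poverty guideline in dollars for a household of the given size."""
    if household_size < 1:
        raise ValueError("household_size must be at least 1")
    return BASE_AMOUNT + PER_EXTRA_PERSON * (household_size - 1)


def percent_of_fpl(annual_income, household_size):
    """Annual household income as a percentage of the poverty guideline."""
    return 100 * annual_income / poverty_guideline(household_size)


def fee_share(percent):
    """Share of the full fee owed, using the first tier whose bound is not exceeded."""
    for upper_bound, share in SLIDING_SCALE:
        if percent <= upper_bound:
            return share
    return 1.0  # above the top tier: full fee


print(poverty_guideline(4))                  # 24250
print(round(percent_of_fpl(30_000, 4), 1))   # 123.7
print(fee_share(percent_of_fpl(30_000, 4)))  # 0.25
```

Keeping the constants in one shared place means only one edit when the guidelines change, which is the point of centralizing the calculation.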

Community health workers in Richmond told us that texting is a convenient way for clients to ask them questions, but they don’t use it much because giving out their personal phone numbers would mean receiving large numbers of texts at all hours. As an experiment, we set up a third-party web application called FrontApp for one CHW, Shikita Taylor. It allows her to send and receive texts on Front’s website instead of her phone. The software also lets her set up auto-replies when she’s out of the office and easily copy and paste answers to common questions. If multiple CHWs are working together, they could give clients a single number and use Front to assign texts to the most appropriate staff member.

Richmond’s network of low-cost clinics and care programs does great work caring for patients without insurance. But both patients and providers told us that the time and effort required to get an appointment discourages many people from using those services. Funding and clinical capacity are certainly part of the reason for wait times, but we also heard that the application process itself was often slow and frustrating for everyone involved. In order to focus limited resources on those who need them most, almost all safety-net providers have an eligibility screening process that determines whether a patient is eligible for free or discounted care (the exception is Care-A-Van, a Bon Secours mobile clinic program). Often, the organization’s funders shape the eligibility criteria and conduct audits, so it’s important to document the decision.

Applications ask for four main types of information:

Income: Providers ask for the patient’s household size and household income and use this to calculate her income as a percentage of the Federal Poverty Level (FPL), then compare this to the organization’s cut-off or sliding scale. Asking someone for her household size and income sounds like it should be simple, but there are sometimes dozens of related questions.

Income documentation: Screening usually requires patients to show documents that prove their income. This is really the most fundamental requirement (a Code for America team working on an alternative food stamp application process in California decided to eliminate income questions entirely, since they can infer the answers from the income documentation). Pay stubs are the most common choice, but they can be tricky. Sometimes patients haven’t kept two months’ worth of pay stubs because they didn’t think they would need them. Some people don’t have pay stubs because they’re paid under the table. Others work inconsistent hours, so their pay varies widely. Patients can also ask their employers for a letter stating their wages, but some employers will refuse, especially if they hired the patient illegally. If you don’t have any income, you often need a notarized letter from someone who supports you.
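Varying pay shows up when a screener turns a handful of pay stubs into an annual income figure. A minimal sketch, assuming each stub gives gross pay and all stubs share one known pay frequency: average the stubs, then multiply by the number of pay periods in a year. The function name and frequency labels are hypothetical, and averaging is only one possible policy; an organization or funder may prescribe a different method.

```python
from statistics import mean

# Number of pay periods in a year for common pay frequencies.
PERIODS_PER_YEAR = {"weekly": 52, "biweekly": 26, "semimonthly": 24, "monthly": 12}


def annualize_pay_stubs(gross_amounts, frequency):
    """Estimate annual income from recent pay stubs with the same pay frequency.

    Averaging the stubs smooths out pay that varies with inconsistent hours.
    """
    if not gross_amounts:
        raise ValueError("at least one pay stub is required")
    return mean(gross_amounts) * PERIODS_PER_YEAR[frequency]


# Four biweekly stubs (about two months) with uneven hours.
print(annualize_pay_stubs([800, 950, 700, 900], "biweekly"))  # 21775.0
```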

Insurance eligibility: Providers want to encourage patients who are eligible for insurance to use those benefits, so it’s important to check whether a patient currently has insurance or might qualify for government programs. The requirements can be so complicated, though, that this is not always an easy question to answer.

Demographic information: Some forms also include demographic questions for grant application purposes. These often vary widely across organizations.

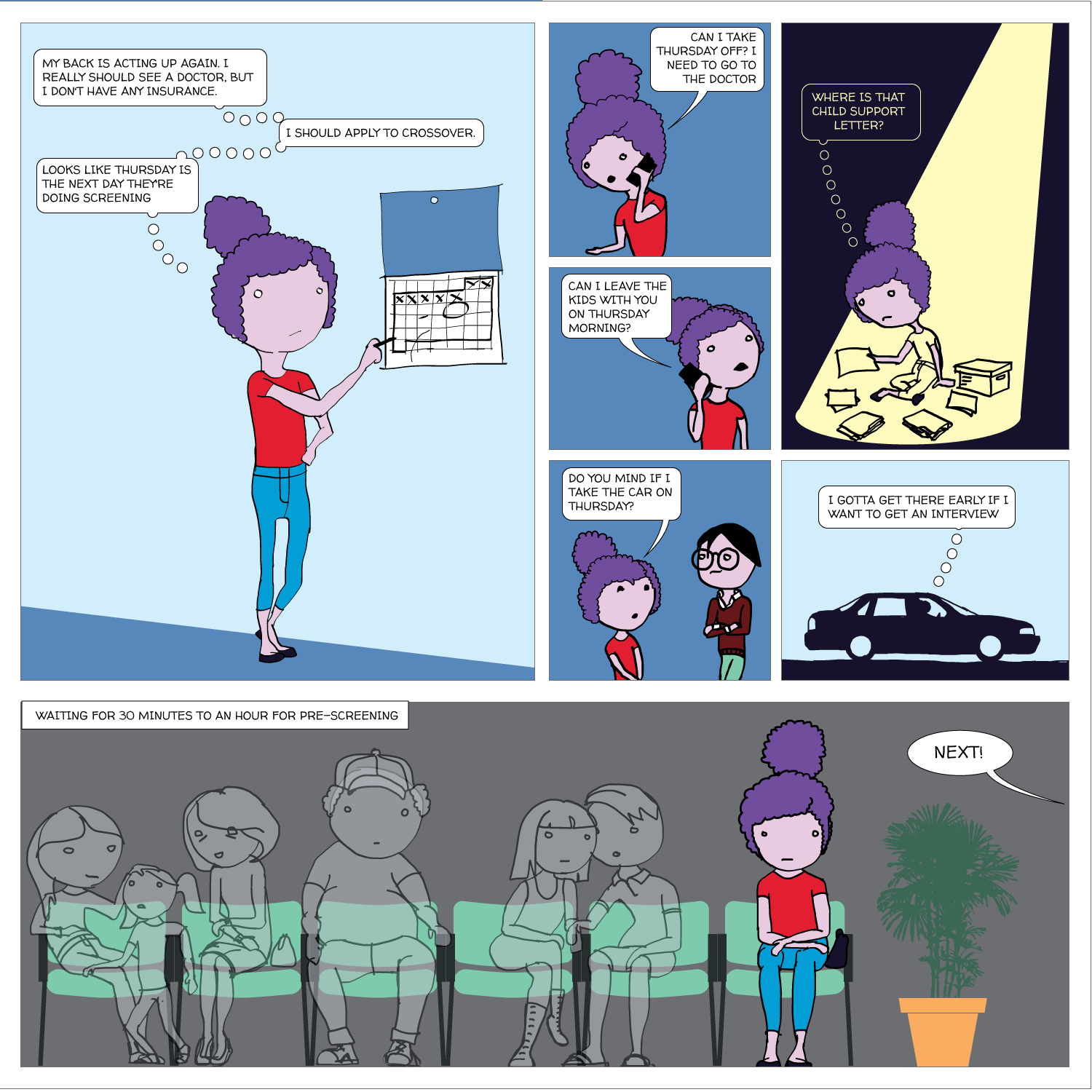

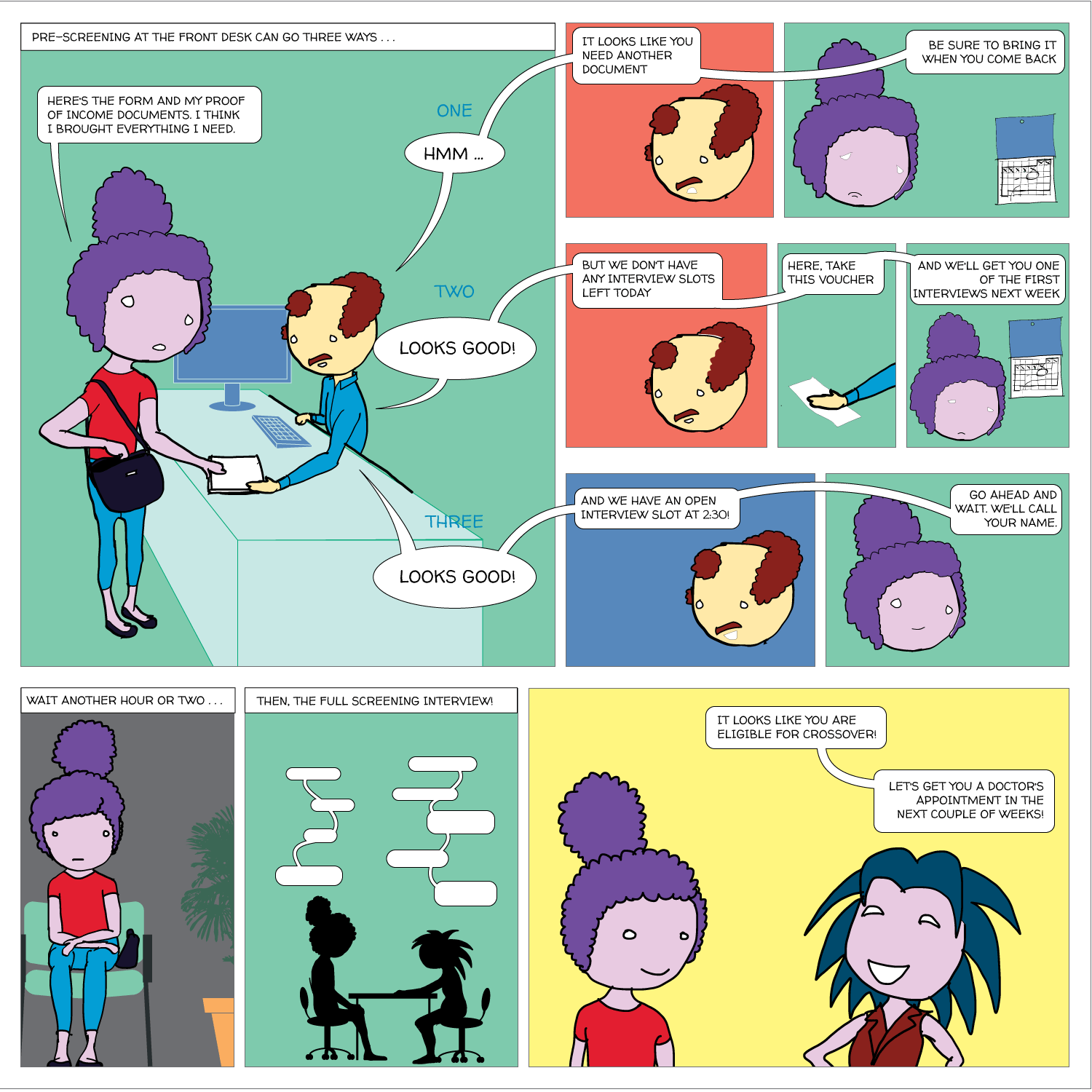

The screening process can be slow and confusing. No two organizations screen in exactly the same way, but here’s an example of a screening process at one primary care clinic:

It’s also redundant. If patients visit another provider, they usually have to go through a separate screening, even though the applications ask for most of the same information. A few providers share a form, but most don’t. Here’s an example:

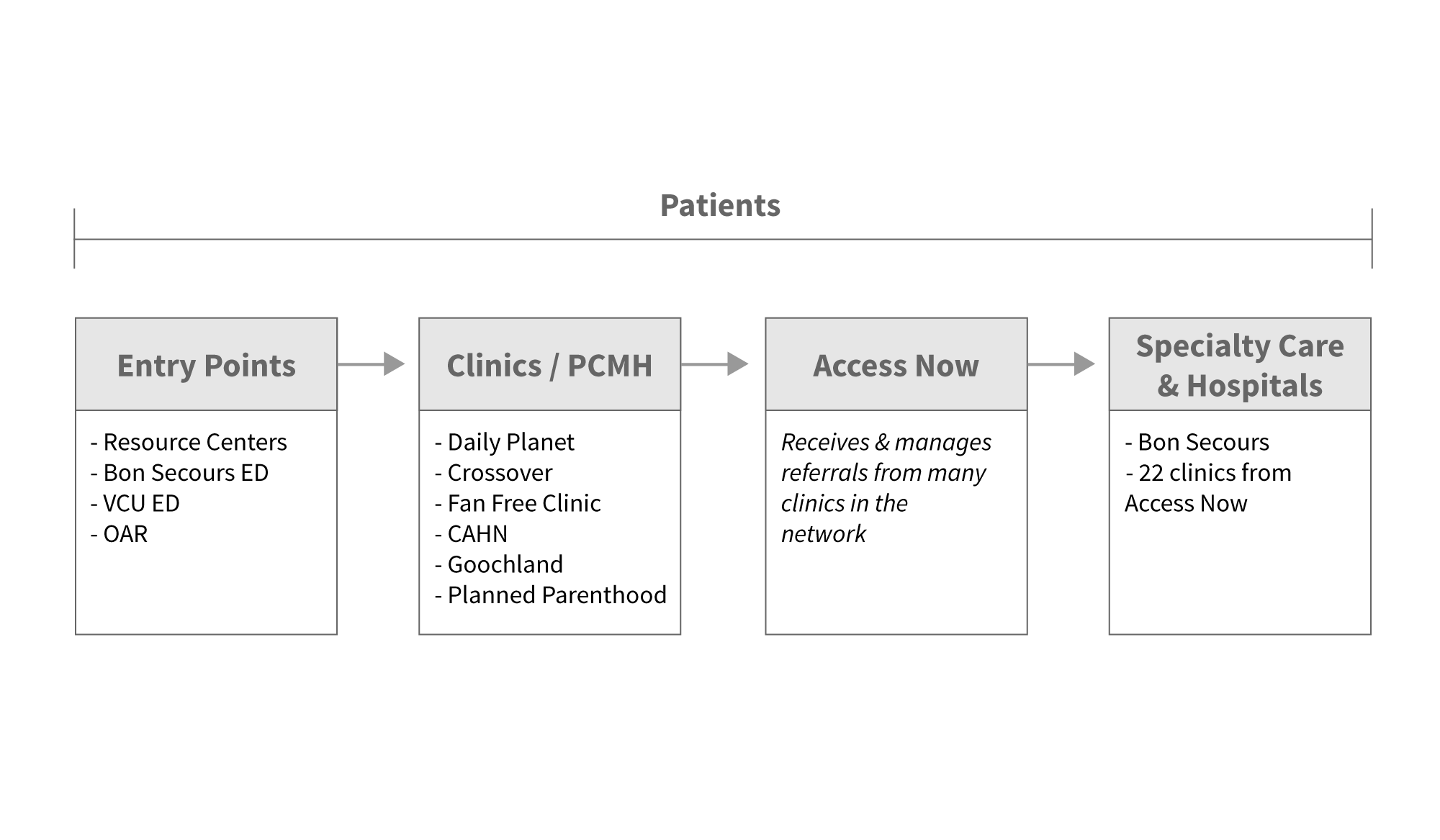

Nurses at the Richmond City Health District Resource Centers offer Pap smears and other basic services for a sliding scale fee. Patients must submit proof of income to be eligible for lower fees. If an uninsured patient has no doctor, the patient might also be referred to Daily Planet.

Daily Planet screens new patients to determine a sliding scale fee for services. At Daily Planet, an uninsured patient can access oral and eye care, as well as primary care. For specialty referrals, such as orthopedic surgery, a patient would be referred to Access Now.

Access Now maintains a network of volunteer physicians who offer specialty care for the uninsured, such as orthopedists, gynecologists, and gastroenterologists. Before connecting patients with these physicians, Access Now requires screening to verify that the patient is uninsured and low-income.

If the specialty care requires using hospital facilities for surgery, CT scans, or MRIs, the hospital will screen the patient once again for Medicaid, Medicare, and the hospital’s own charity care programs.

For care providers, the status quo means spending a great deal of staff time on screening that could otherwise be spent on something more directly related to patient care. For patients, it means delays getting into care (which can make the ER seem like the better option). And the confusion and frustration damage patients’ trust in the healthcare system. Community members find the application process confusing and demoralizing, and don’t understand why some people are denied.

We built a web application that we think can improve this process. Here’s how it works:

Online applications

Patient profiles contain the information currently collected on paper forms, and allow users to upload images of documents like pay stubs.

Eligibility estimates

We use each organization’s eligibility criteria to estimate whether the patient is likely to be eligible for care at each one, and where he might fall on a sliding scale.
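One way to structure these estimates is to store each organization’s criteria as data and evaluate a patient against every set, returning “unknown” when a needed answer is missing. The Python sketch below is hypothetical: the organization names, cut-offs, and the single “must be uninsured” rule are illustrative and are not any partner’s real criteria or our production code.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Criteria:
    max_percent_fpl: float           # income cut-off as a percentage of FPL
    requires_uninsured: bool = False


@dataclass
class Patient:
    percent_fpl: Optional[float] = None  # None means the question is unanswered
    insured: Optional[bool] = None


def estimate(patient, criteria):
    """Return 'likely', 'unlikely', or 'unknown' for one organization."""
    if patient.percent_fpl is None:
        return "unknown"
    if patient.percent_fpl > criteria.max_percent_fpl:
        return "unlikely"
    if criteria.requires_uninsured:
        if patient.insured is None:
            return "unknown"
        if patient.insured:
            return "unlikely"
    return "likely"


# Hypothetical organizations and criteria, for illustration only.
ORGANIZATIONS = {
    "Clinic A": Criteria(max_percent_fpl=200),
    "Specialty Network B": Criteria(max_percent_fpl=200, requires_uninsured=True),
}


def estimate_all(patient):
    return {name: estimate(patient, c) for name, c in ORGANIZATIONS.items()}


print(estimate_all(Patient(percent_fpl=150)))
```

Because unanswered questions produce “unknown” rather than an error, the same logic could also back a short pre-screen that asks only a few questions.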

Pre-screening

The pre-screening tool allows patients to answer the most basic eligibility questions and tells them where they’re likely to qualify for services. It can help the patient figure out whether it’s worth the time to fill out a complete application.

Share screening data

With the patient’s permission, her information is shared with other organizations in the network so she doesn’t have to provide it repeatedly.

Track referrals and screening results over time

This view allows users to see any past referrals or screening results for a patient.
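A history view like this can be built from a simple log of dated events per patient. A minimal sketch, assuming each referral or screening result is stored as one record; the `Event` fields, outcome labels, and sample data are hypothetical and not the application’s actual data model.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Event:
    patient_id: int
    day: date
    organization: str
    kind: str     # "referral" or "screening"
    outcome: str  # e.g. "sent", "received", "eligible", "not eligible"


def history(events, patient_id):
    """All events for one patient, most recent first."""
    return sorted(
        (e for e in events if e.patient_id == patient_id),
        key=lambda e: e.day,
        reverse=True,
    )


# Hypothetical sample events.
events = [
    Event(1, date(2016, 3, 1), "Organization X", "referral", "sent"),
    Event(1, date(2016, 3, 15), "Organization Y", "screening", "eligible"),
    Event(2, date(2016, 3, 10), "Organization X", "screening", "not eligible"),
]

for e in history(events, 1):
    print(e.day, e.organization, e.kind, e.outcome)
```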

Varying permission levels

The application has different permissions for patients (who can see only their own data), staff (who see all patients), and administrators (who can adjust settings for an organization).
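These three roles map naturally onto a small set of permission checks. The Python sketch below is a hypothetical illustration, not the application’s code; in particular, it assumes administrators can also see every patient, which the roles above don’t state explicitly.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class User:
    role: str                           # "patient", "staff", or "admin"
    patient_id: Optional[int] = None    # set for patient accounts
    organization: Optional[str] = None  # set for staff and admin accounts


def can_view_patient(user, patient_id):
    """Patients see only their own record; staff (and, by assumption, admins) see all."""
    if user.role == "patient":
        return user.patient_id == patient_id
    return user.role in ("staff", "admin")


def can_edit_settings(user, organization):
    """Only an administrator of that organization can change its settings."""
    return user.role == "admin" and user.organization == organization


patient = User(role="patient", patient_id=7)
admin = User(role="admin", organization="Clinic A")
print(can_view_patient(patient, 8), can_edit_settings(admin, "Clinic A"))  # False True
```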

Privacy & HIPAA compliance

We use Aptible to help meet technical HIPAA requirements. All patients sign a consent form before their information is entered into the application, and partner organizations sign a Memorandum of Understanding.

Mobile-friendly

The application also works on smartphones or tablets. For example, community health workers could use it while visiting patients in their homes.

Export data

A patient’s information can be exported in PDF or CSV format.
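For the CSV case, Python’s standard `csv` module handles quoting of values that contain commas. A minimal sketch, assuming a profile is a flat dictionary of field names to values; the field names are hypothetical, and PDF export would need a separate library that isn’t shown here.

```python
import csv
import io


def profile_to_csv(profile):
    """Serialize one patient profile (a flat dict) as a header row plus one data row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(profile))
    writer.writeheader()
    writer.writerow(profile)
    return buffer.getvalue()


print(profile_to_csv({"first_name": "Ana", "household_size": 3, "city": "Richmond, VA"}))
```

The value containing a comma is quoted automatically, so spreadsheet programs read it back as a single cell.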

Translations

The application has a full Spanish version, and technical infrastructure that makes it easy to add other languages later.
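A common pattern for this kind of infrastructure is to look up every interface string by key in a per-language table and fall back to a default language when a translation is missing. The Python sketch below is hypothetical: the keys and the tiny dictionaries are illustrative and don’t show the application’s actual translation framework. Adding a language means adding one more table.

```python
# Hypothetical translation tables keyed by language code, then by string key.
TRANSLATIONS = {
    "en": {"household_size": "Household size", "submit": "Submit"},
    "es": {"household_size": "Tamaño del hogar", "submit": "Enviar"},
}


def translate(key, language, default_language="en"):
    """Look up a UI string, falling back to the default language, then to the key."""
    for lang in (language, default_language):
        text = TRANSLATIONS.get(lang, {}).get(key)
        if text is not None:
            return text
    return key


print(translate("household_size", "es"))  # Tamaño del hogar
print(translate("submit", "fr"))          # Submit (no French table yet)
```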

Despite all of our research, what we built is based on our assumptions, both explicit and implicit, about what users want and need. Only those users can show us which are right and which are wrong, and we wanted to get that feedback early and often so that we could make sure what we built works for them. From May onward, we spent our trips to Richmond showing our prototype application to potential users and letting them navigate through it without too much direction, asking them about their expectations and thoughts at each step.

We focused on users in three different roles.

The staff who review applications and determine whether a patient is eligible for care or where she falls on a sliding scale.

People who aren’t screeners, but help patients apply for health services. They include case managers and community health workers.

The leadership of the organizations we’re working with. They probably won’t use the application frequently themselves, but they can give us feedback on whether the application fits their organization’s larger goals and meets their funders’ requirements.

We also built a patient-facing version of the application, which allows the user to view only her own profile. Unlike the staff version, it hasn't been user tested extensively, but it could be developed further in the future.

Much of the user feedback focused on UI details. For example, it doesn’t make sense to ask about the spouse’s employment status if the patient isn’t married. Or a dropdown for phone number type (home, cell, work, etc.) would be easier than a text field. We fixed those issues as quickly as possible to eliminate potential pain points. But most important were the big questions: What would you use this for? How does it help you meet your goals? What would prevent you from using the software today? What would make you want to start using it immediately?
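Skipping irrelevant questions, like the spouse example, can be handled by attaching an optional visibility rule to each question and evaluating it against the answers given so far. A minimal sketch, assuming questions are defined as data; the question IDs and the `show_if` convention are hypothetical.

```python
# Hypothetical question definitions; "show_if" is an optional visibility rule.
QUESTIONS = [
    {"id": "marital_status", "text": "What is your marital status?"},
    {
        "id": "spouse_employment",
        "text": "Is your spouse employed?",
        "show_if": lambda answers: answers.get("marital_status") == "married",
    },
]


def always_shown(answers):
    return True


def visible_questions(answers):
    """IDs of the questions to show, given the answers collected so far."""
    return [q["id"] for q in QUESTIONS if q.get("show_if", always_shown)(answers)]


print(visible_questions({"marital_status": "single"}))   # ['marital_status']
print(visible_questions({"marital_status": "married"}))  # ['marital_status', 'spouse_employment']
```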

Here are a few of the lessons that shaped our thinking the most:

People are often nervous about projects that involve sharing health data, because they’re concerned about violating HIPAA. We found that this is a hurdle, but not an insurmountable one. We developed a business associate agreement and a patient consent form with the help of a lawyer who specializes in HIPAA, and worked with a company called Aptible that specializes in HIPAA-compliant web applications to make sure we have all the necessary security features.

When we showed our prototype to potential users, we realized that their biggest barrier to using it was the potential for duplicate data entry. Some clinics typically have the patient fill out a paper form in the office and then a staff member types all the information into their electronic health record system. If we asked those staff members to then enter the same data into our application as well, we’d be adding a lot of extra work, so it’s important to find ways to either integrate with their existing software or change workflows to avoid duplication.

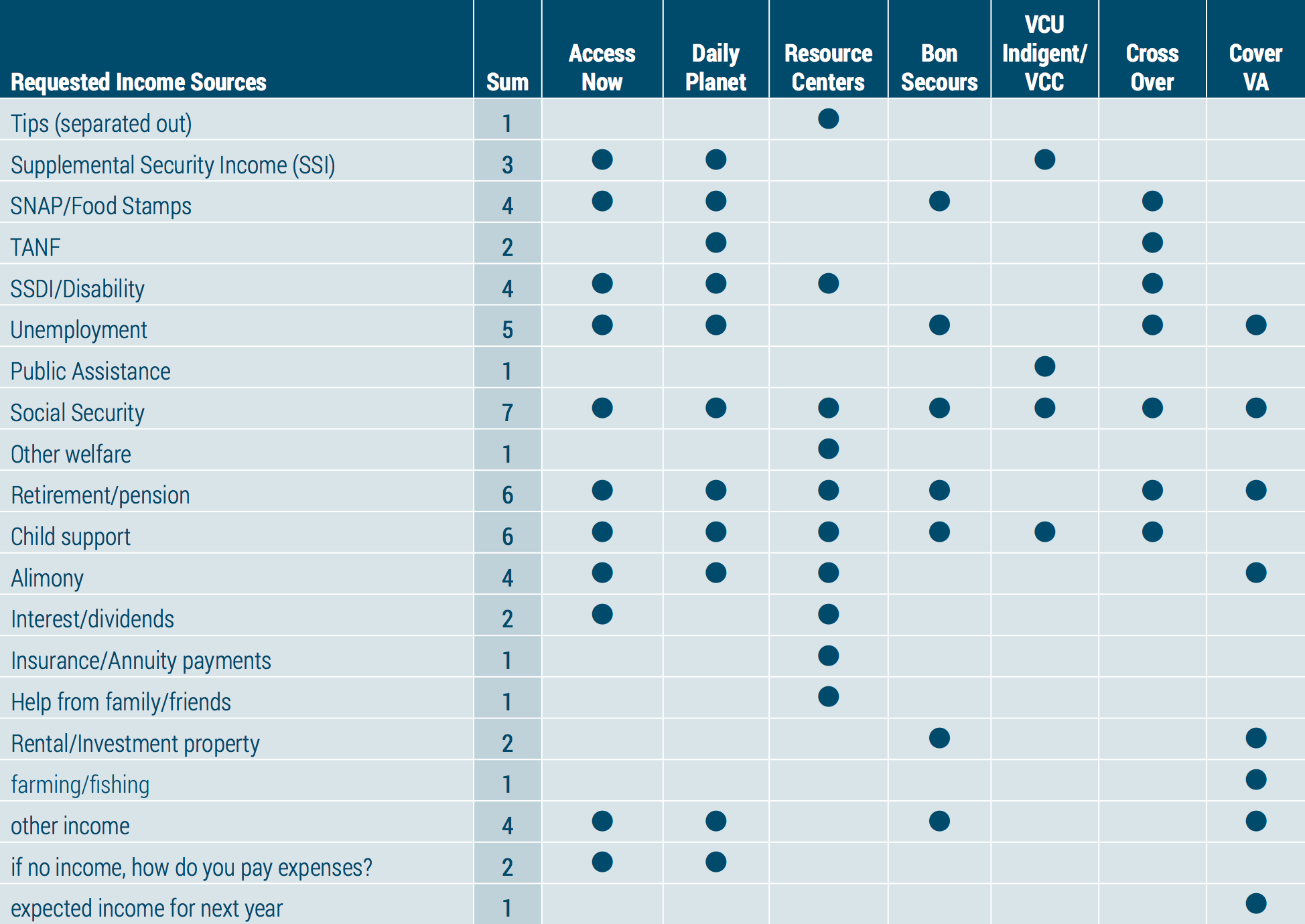

Application forms vary widely across organizations. For example, this chart shows all the different types of income that organizations might ask about.

Some forms ask about food stamps, some ask about social security, one asks you to separate out any income earned as tips. There are hundreds of unique questions, but they’re all really getting at the same thing. As we tried to build a web form that asked all the necessary questions, we talked to our partners about whether all of these questions are really necessary and whether there are ways we could make the forms more similar.
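One way to reconcile forms that ask at different levels of detail is to collect the most detailed income types once and derive the broader totals that simpler forms need. The Python sketch below is hypothetical: the income types, categories, and mapping are illustrative, not the chart’s actual contents or a recommendation about which income counts toward eligibility.

```python
# Hypothetical mapping from detailed income types to broader categories.
INCOME_CATEGORY = {
    "wages": "employment",
    "tips": "employment",
    "self_employment": "employment",
    "social_security": "benefits",
    "unemployment": "benefits",
    "child_support": "other",
}


def totals_by_category(monthly_income):
    """Collapse detailed monthly income amounts into broader category totals."""
    totals = {}
    for income_type, amount in monthly_income.items():
        category = INCOME_CATEGORY.get(income_type, "other")  # unmapped types go to "other"
        totals[category] = totals.get(category, 0) + amount
    return totals


detailed = {"wages": 1200, "tips": 300, "social_security": 400}
print(totals_by_category(detailed))  # {'employment': 1500, 'benefits': 400}
```

A form that asks separately about tips can use the detailed answer directly, while a form that asks only for employment income can use the derived total, so the patient answers once.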

Community health workers and social workers in Richmond already spend a lot of time referring patients to care programs, but right now, they usually can’t do much more than hand out fliers and encourage the patient to apply. Once the application is online, they can actually get the process started. Those users were really interested in figuring out whether a referral was successful. Did the organization receive the application, and is the patient eligible? And care providers are really interested in knowing where else a patient has been seen, so that they can make sure they’re not duplicating care. This feedback led us to build the referral and screening history page.

Users were consistently excited by the eligibility estimates because they don't currently have an easy way to compare eligibility criteria across organizations. We think this is really valuable for people making referrals, but it’s only useful if the information can be kept up to date.

After dozens of user research sessions, we’ve received a great deal of qualitative user feedback on both the application and our process. Here are a few of the highlights.

As the application scales, key metrics might include:

After months of testing the software with mock data and receiving very positive feedback from users, it’s ready for a pilot with real patients. We suggest a pilot that lasts three to four weeks and involves several dozen patients. Start with three organizations (likely the RCHD Resource Centers, Daily Planet, and Access Now) to test the chain of referrals from an entry point to primary care to specialty care.

Ideally, Quickscreen would be maintained and developed further by a digital agency with experience not only engineering web applications, but also driving design with user research. A “project owner”, ideally an organization already involved in safety-net healthcare, would handle the contract with the developer and maintain communication with the other organizations in the network. We’re currently exploring options for each of these roles, as well as potential funding sources.

As our partners in Richmond continue to develop this work, we offer the following recommendations.

We’re incredibly grateful to have worked with so many dedicated, talented people who took the time to teach us about their work, answer our unending questions, and test our buggy prototypes. Thanks for a fun, inspiring, and educational year!

A special thanks to the following: Albert Walker, Alex Chamberlain, Amber Haley, Amelia Goldsmith, Amy Popovich, Aneesh Chopra, Barbara Harding, Bill Misturini, Bryan Tunstall, Camila Borja, Cynthia Newbille, Damon Jiggetts, Dana Wiggins, Dave Belde, Deepak Madala, Deven McGraw, Elaine Summerfield, Elizabeth Wong, Gwen Creighton, Helen Gonzalez, Helena Semeraro, Jameika Sampson, Jeff Cribbs, John Baumann, John Estes, Julie Bondy, Kara Weiland, Kathryn Zapach, Kim Lewis, Kimyatta Moses, Kysha Washington, Leslie Gibson, Marilyn Nicol, Mason Dimick, Maureen Neal, Nancy Stutts, Pamela Cole, Paulette Edwards, Paulette McElwain, Robert Belfort, Robin Mullet, Ryan Raisig, Sanchita Dasgupta, Sandy Fields, Sara Conlon, Sarah Bennett, Sarah Scarbrough, Sean O’Brien, Shenee McCray, Sheryl Garland, Shikita Taylor, Tammy Toler, Teresa Kimm, Trina Jaehnigen, Velvet Mangum-Holmes, Veronica Blount